Introduction to ChatGPT Prompt Engineering

ChatGPT, the language model created by OpenAI, has attracted a lot of attention for its ability to produce human-like responses in conversation. To get the best performance from it and to mitigate potential biases, however, prompt engineering plays a crucial role.

Here, we explore ChatGPT prompt engineering for developers. We look at ChatGPT’s foundations, explain why prompt engineering matters, and show how to design effective prompts. We also cover evaluation techniques, examples of practical applications, and best practices to help developers get the most out of prompt engineering with ChatGPT.

- What is ChatGPT?

ChatGPT is a language model created by OpenAI that can produce human-like responses in conversational contexts. Because it has been trained on a massive amount of text data, it can interpret messages and produce meaningful, contextually relevant replies.

- The Role of Prompt Engineering in ChatGPT

Prompt engineering significantly shapes ChatGPT’s behavior and responses. By giving the model specific instructions and examples, developers can steer it toward the desired behavior. Prompt engineering helps ensure that ChatGPT understands user inputs accurately and responds in a way that meets the user’s expectations.

Prompt Engineering Markdown Examples

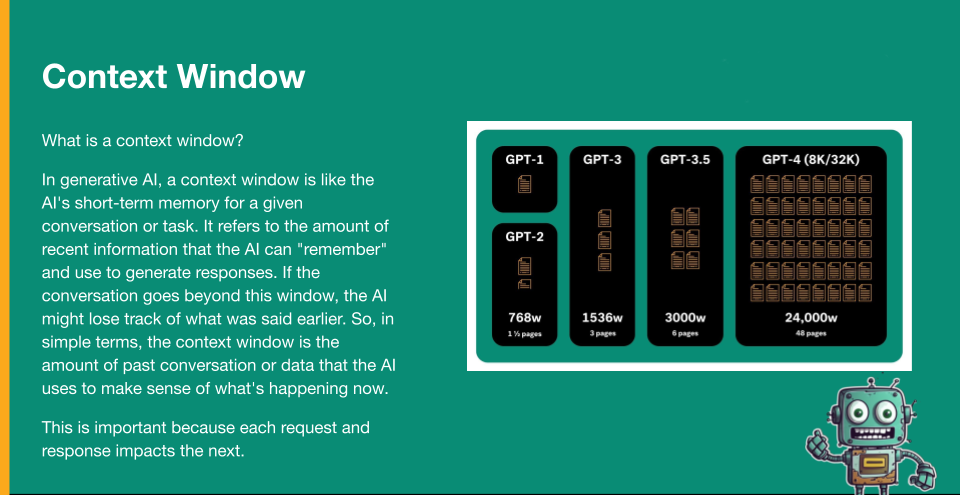

In generative AI, a context window is essentially the model’s short-term memory throughout the conversation you’re having with the tool.

In GPT-4 the context window can be fairly substantial: up to 32,000 tokens, which is about 24,000 words or 48 pages. There is also a larger version that isn’t yet generally available, but even so this is a very large context window. This matters because if you stay in the same chat session while changing topics, everything you asked and every reply you received earlier will affect the responses you get afterwards. Start a fresh chat whenever you start a new subject.
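The figures above imply roughly 0.75 words per token (24,000 words for 32,000 tokens). The sketch below uses that ratio as a rough estimate, not a real tokenizer, to keep only the most recent messages that fit a token budget. The names `estimate_tokens` and `trim_history`, and the sample history, are hypothetical.

```python
import math

# Rough ratio implied by the figures above: 24,000 words ~ 32,000 tokens.
# This is an approximation, not a real tokenizer.
WORDS_PER_TOKEN = 24_000 / 32_000  # 0.75


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a text from its word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


def trim_history(messages: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose estimated tokens fit the budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "Tell me about clogged toilets.",
    "Now write a tweet about gardening.",
    "Make it shorter.",
]
print(trim_history(history, budget=12))
```

With a budget this small the oldest message is dropped, which mirrors what happens when an earlier topic falls outside the window. Starting a fresh chat achieves the same effect deliberately.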

Context Window – LLM (Large Language Model) Memory

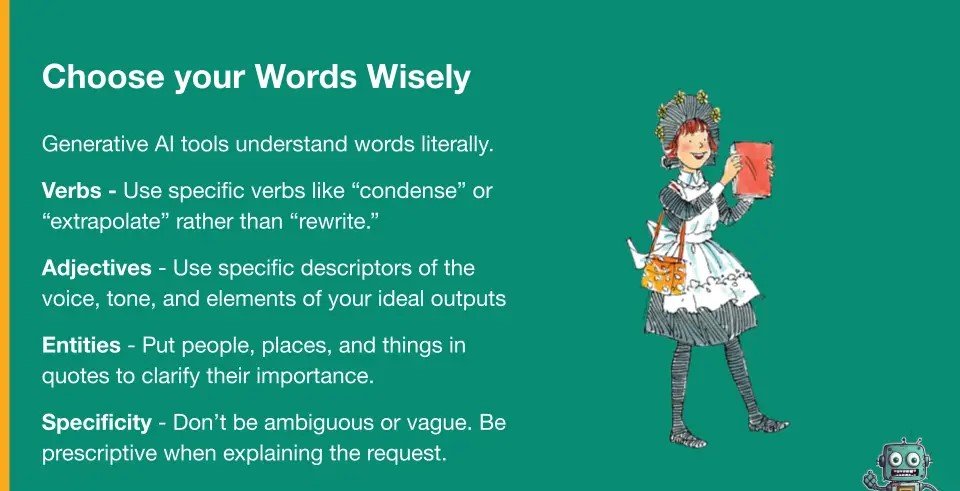

You should therefore choose your words carefully. Amelia Bedelia is a fictional character from children’s books, and if you’re familiar with her, you’ll know that she takes everything very literally, just like ChatGPT does. To achieve your goals, you must be very careful with the words you choose.

Use precise verbs such as “condense” or “extrapolate” rather than “rewrite.” When you say “rewrite,” the model has to decide for itself what you mean: should the text stay the same length? Should it simply say the same thing again? If you say “condense” or “summarize,” it will shorten the text. “Extrapolate” tells it to add more based on what is already there. Choosing precise verbs is crucial.
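One way to make this habit concrete is a small check that flags vague verbs before a prompt is sent. This is only an illustrative sketch: the `VAGUE_VERBS` table and the `suggest_precise_verbs` function are hypothetical, and the suggestions are limited to the verbs mentioned above.

```python
import re

# Vague verbs mapped to the more precise alternatives mentioned in the text.
# The table is illustrative, not exhaustive.
VAGUE_VERBS = {
    "rewrite": ["condense", "summarize", "extrapolate"],
}


def suggest_precise_verbs(prompt: str) -> dict[str, list[str]]:
    """Return each vague verb found in the prompt with suggested replacements."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return {verb: options for verb, options in VAGUE_VERBS.items() if verb in words}


print(suggest_precise_verbs("Rewrite this paragraph about context windows."))
```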

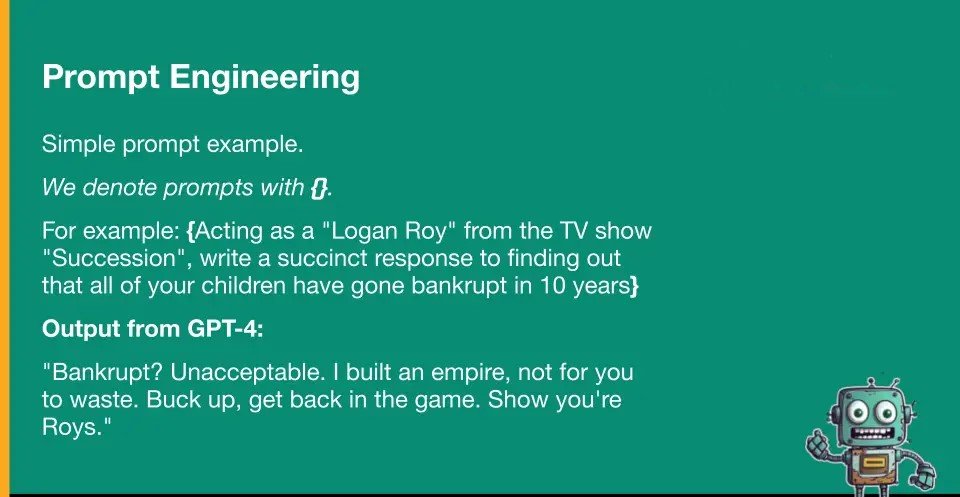

Be careful with adjectives, especially those that describe your content’s voice and tone, and make those descriptors as precise as you can. Then there are entities: any persons, places, or things. I like to put these in quotation marks to make them clearer to the language model.

The quotation marks say, “Hey, I’m talking about this particular thing.” For example, if I wrote “Logan Roy Rogers” without them, the model might assume that I meant Roy Rogers, which can be both a person and a restaurant. Putting “Logan Roy” in quotation marks makes it clear that I’m referring to one particular entity.

Finally, specificity. It is imperative that you provide as much detail as you can. Unless you want it to be creative, don’t be ambiguous or imprecise.

LLM’s (Large Language Model) Literal Understanding of Language

A prompt’s anatomy, or at least the basic structure of the prompts that AIPRM and many members of our community use, looks something like this. The order of the parts is not particularly important; you simply want to make sure that everything makes sense when you paste it into ChatGPT.

First, the role: who is ChatGPT responding as?

Is it a person, or is it a certain line of work?

Be very specific here. Doing so constrains the context of its answer.

Then there is the broader context.

Where is this individual?

For whom are they writing?

Do they produce books?

Is the person chatting to the plumber about clogged toilets at their house?

Whatever the situation is, describe it to the model that is supposed to reply.

The guidelines:

What are you hoping they will do?

Do you require them to write anything specific and in a particular order?

Do you want the stuff they’re sending you to have certain headings?

Do you prefer long or short paragraphs when they write for you?

Do you want it to take factors like perplexity into consideration so that the text can fool an AI detector?

These directions must be baked right in.

The format: that will also influence how it replies.

Is it intended to be tweeted?

Is that supposed to be a book chapter?

Will it be posted to a blog?

Be very explicit about the format you have in mind, because it will either limit or increase the number of words in the response.

If you have examples, that is ideal: show them to the model and it can imitate exactly what you want. Examples aren’t necessary, but they are the fastest route to your goal.

Finally, be mindful of any constraints. Be sure to include restrictions such as no technical jargon, a specific voice or tone, or a particular style of message. Composing prompts in this format will become automatic with practice.

I now always begin a prompt with the role, which will be something like “New York Times bestselling author,” since you would expect that to produce fairly good writing.

A common worry is that the writing may be superficial or overly simple. To get closer to the result I want, I tell it, “Write something with a lot of technical detail,” or add whatever constraints I require.
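The anatomy described above (role, context, instructions, format, examples, constraints) can be sketched as a small prompt builder. This is a minimal illustration, not a standard API: `PromptParts`, `build_prompt`, the section labels, and the sample values are all hypothetical choices.

```python
from dataclasses import dataclass, field


@dataclass
class PromptParts:
    """The six parts of a prompt described above. All names are illustrative."""
    role: str
    context: str
    instructions: str
    output_format: str
    examples: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)


def build_prompt(parts: PromptParts) -> str:
    """Join the non-empty parts into one labeled prompt string."""
    sections = [
        ("Role", parts.role),
        ("Context", parts.context),
        ("Instructions", parts.instructions),
        ("Format", parts.output_format),
        ("Examples", "\n".join(parts.examples)),
        ("Constraints", "\n".join(f"- {c}" for c in parts.constraints)),
    ]
    # Skip parts that were left empty, such as examples when none are given.
    return "\n\n".join(f"{label}:\n{body}" for label, body in sections if body)


prompt = build_prompt(
    PromptParts(
        role="New York Times bestselling author",
        context="Writing for homeowners who are talking to a plumber about a clogged toilet.",
        instructions="Write something with a lot of technical detail.",
        output_format="A blog post with short paragraphs and headings.",
        constraints=["Keep a friendly, conversational tone"],
    )
)
print(prompt)
```

Because the order of the parts is not important, the fixed order here is only a convenience. Keeping each part as a separate field makes it easy to change one part, for example the format, without rewriting the rest.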

Understanding the Basics of ChatGPT Model

- Overview of the ChatGPT Architecture

ChatGPT is built upon the foundation of the GPT (Generative Pre-trained Transformer) architecture. It uses a transformer-based model to capture the relationships between words and produce responses that are coherent and appropriate to the context.

- Key Components of the ChatGPT Model

The ChatGPT model consists of several components working together to enable conversational capabilities. GPT models are decoder-only transformers, so there is no separate encoder: incoming messages are split into tokens, and a stack of decoder layers generates the response one token at a time. Attention mechanisms in those layers help the model take the preceding context into account and produce sensible responses.

Prompt Engineering is Important for Developers

- Enhancing Model Performance through Effective Prompts

Effective prompt engineering can significantly enhance the performance of ChatGPT. By carefully crafting prompts, developers gain some control over the model’s answers and can tailor them to specific use cases. Well-designed prompts enable ChatGPT to generate more precise and context-aware responses, which gives users a better experience.

- Addressing Limitations and Biases with Prompt Engineering

Prompt engineering also allows developers to address limitations and biases that may arise in the ChatGPT model. By explicitly telling the model to avoid specific patterns or biases, developers can reduce problems and promote fair and impartial interactions with users.

Designing Effective Prompts for ChatGPT

- Defining Clear Goals and Instructions

Clearly stating objectives and instructions is crucial when creating prompts for ChatGPT. Communicating the desired outcome explicitly guides the model’s responses and helps it interpret the user’s intent accurately.

- Structuring Prompts for Desired Responses

Structuring prompts in a way that encourages specific responses is another key aspect of prompt engineering. Developers can affect ChatGPT’s output and make it provide more pertinent and meaningful replies by taking the desired responses into account and arranging prompts accordingly.

- Leveraging System and User Messages

Prompt engineering can be further enhanced by using system and user messages effectively. System messages set the behavior or context of the conversation, while user messages provide the input for the model to respond to. Using these messages wisely can result in more interesting and dynamic interactions with ChatGPT.
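A minimal sketch of how the two kinds of message can be laid out, assuming the role/content message list commonly used by chat-style APIs. Nothing is sent to a model here; `build_messages` is a hypothetical helper and the example text is illustrative.

```python
def build_messages(system_prompt: str, user_input: str) -> list[dict[str, str]]:
    """Return a chat-style message list: a system message, then the user turn."""
    return [
        # The system message sets the behavior and context of the conversation.
        {"role": "system", "content": system_prompt},
        # The user message is the input the model should respond to.
        {"role": "user", "content": user_input},
    ]


messages = build_messages(
    "You are a support assistant for a plumbing company. Answer briefly.",
    "My toilet is clogged. What should I try first?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the system message separate from the user input means the behavioral instructions can stay fixed while the user text changes from turn to turn.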

By harnessing prompt engineering, developers can customize ChatGPT’s behavior and output, making it a flexible tool for a range of applications and ensuring satisfying interactions between users and the model.

Techniques to Improve Prompt Engineering

Getting chatbots like ChatGPT to deliver precise and useful responses requires prompt engineering. Here are a few techniques developers can employ to enhance prompt engineering:

- Pre-training and Fine-tuning Strategies

It’s crucial to carefully consider the pre-training and fine-tuning procedures in order to maximise ChatGPT’s performance. This involves choosing suitable datasets, training the model on relevant data, and then customising it for certain tasks or domains. Refining the model over multiple training iterations can improve its understanding and lead to more accurate responses.

- Data Augmentation for Prompt Engineering

Data augmentation techniques can be useful in prompt engineering. By adding more varied prompts to the training dataset, developers can expose the model to a wider range of inputs. This strengthens its ability to handle a broad variety of user requests, making it more robust and adaptable.
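The text does not prescribe a particular augmentation method. As one possible approach, the sketch below generates prompt variants by combining tasks with phrasing templates. `TEMPLATES`, `augment_prompts`, and the sample tasks are hypothetical.

```python
from itertools import product

# Hypothetical phrasing templates; a real dataset would use many more.
TEMPLATES = [
    "How do I {task}?",
    "Can you explain how to {task}?",
    "I need help: I want to {task}.",
]


def augment_prompts(tasks: list[str]) -> list[str]:
    """Produce every task/template combination to diversify a prompt dataset."""
    return [template.format(task=task) for task, template in product(tasks, TEMPLATES)]


variants = augment_prompts(["reset my password", "cancel my order"])
for variant in variants:
    print(variant)
```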

Best Practices and Guidelines for ChatGPT Prompt Engineering

While prompt engineering has its technical side, there are also best practices and principles that can help developers get the most out of their work. Here are some key practices to keep in mind:

- Crafting Contextual and Specific Prompts

To elicit accurate responses, prompts should provide sufficient context and be as specific as possible. Clearly describe the desired outcome or the information you are seeking from the chatbot. Avoid ambiguous or open-ended prompts that may lead to inconsistent or incorrect responses.

- Balancing Openness and Control in Prompts

Finding the right balance between giving the chatbot creative freedom and keeping control over its responses is important. To guide the model’s behaviour without completely confining it, developers can use techniques such as system messages or user instructions. The result is a more controlled and reliable conversation.

- Avoiding Implicit Biases in Prompts

Be aware of any implicit biases that might creep into your prompts. Biased questions can produce biased answers, which could contribute to the spread of negative stereotypes or misinformation. Review prompts for unintentional bias and aim for neutrality and fairness in the conversation.

Evaluating and Iterating on Prompt Engineering Approaches

Prompt engineering requires iteration and ongoing evaluation. Here are two important steps in refining prompt engineering approaches:

- Monitoring Model Outputs and Feedback Loops

Regularly monitor the outputs generated by ChatGPT and evaluate their quality. Watch out for any responses that are inconsistent, inaccurate, or biased. Create feedback loops to gather user input and identify areas for improvement. This iterative process improves the model’s responses and uncovers flaws in the prompts.

- Collecting User Feedback for Prompt Iteration

User feedback is essential to improving prompt engineering. User input and ideas can be folded into the iterative process to improve both the prompts and the model’s responses. Actively seek feedback from real users and use it to make ChatGPT more effective at understanding and addressing their needs.
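The two steps above can be sketched as a very small feedback loop that stores user ratings per prompt version and reports which version scores best. This is an assumption about how such a loop might be organised, not a prescribed method. The function names, the 1 to 5 rating scale, and the sample ratings are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Ratings collected per prompt version; 1 = unhelpful, 5 = very helpful.
feedback: dict[str, list[int]] = defaultdict(list)


def record_feedback(prompt_version: str, rating: int) -> None:
    """Store one user rating for the given prompt version."""
    if not 1 <= rating <= 5:
        raise ValueError("rating must be between 1 and 5")
    feedback[prompt_version].append(rating)


def best_prompt_version() -> str:
    """Return the prompt version with the highest average rating."""
    return max(feedback, key=lambda version: mean(feedback[version]))


# Made-up illustrative ratings for two versions of a prompt.
for version, rating in [("v1", 3), ("v1", 2), ("v2", 4), ("v2", 5)]:
    record_feedback(version, rating)

print(best_prompt_version())
```

In practice the ratings would come from real users, and a low-scoring version would be revised and measured again on the next iteration.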

Leveraging ChatGPT Prompt Engineering in Real-world Applications

ChatGPT’s usefulness in a number of practical applications can be greatly enhanced through prompt engineering. Several examples are provided below:

- Using Prompt Engineering for Customer Support Bots

By incorporating effective prompts, customer support bots can streamline conversations and give accurate answers to common requests. Well-designed prompts can guide customers through the conversation and encourage them to ask questions in a way that leads to accurate and helpful answers.

- Enhancing Virtual Assistants with Effective Prompts

Prompt engineering can help virtual assistants by enabling them to give more precise and contextually aware responses. Virtual assistants can provide personalised support and more fully comprehend user needs by designing prompts that highlight certain user intentions or preferences.

- Incorporating Prompt Engineering in Chatbot Development

Prompt engineering should be an integral part of chatbot development. By thoughtfully designing prompts, developers can control the flow of the conversation, improve the accuracy of responses, and provide a consistent user experience.

Careful prompt engineering helps create chatbots that meet user expectations and hold meaningful conversations. To sum up, it takes prompt engineering to make the most of ChatGPT’s strengths in conversational applications. By understanding the model’s underlying assumptions, designing effective prompts, and following recommended practices, developers can improve the responsiveness and reliability of their ChatGPT-powered systems.

Prompt engineering methods improve through constant assessment, iteration, and user input. With well-written prompts, developers can make full use of ChatGPT and build more engaging and natural dialogues in a variety of areas, from customer support bots to virtual assistants. As ChatGPT develops, prompt engineering will remain a crucial skill for developers who want to deliver the best performance and user experience in their apps.